Schedule

The influence of the new technologies on data architectures (Dutch spoken)

The rule used to be that data architectures had to be designed independently of technologies and products: first design the data architecture, then select the right products. This was achievable because many products were reasonably interchangeable. But is that still possible? In recent years we have been confronted with an unremitting stream of technologies for processing, analyzing and storing data, such as Hadoop, NoSQL, NewSQL, GPU databases, Spark and Kafka. These technologies have a major impact on data processing architectures, such as data warehouses and streaming applications. Most importantly, many of these products have highly specific internal architectures that directly enforce certain data architectures. So, can we still develop a technology-independent data architecture? This session explains the potential influence of the new technologies on data architectures.

- Are we stuck in our old ideas about data architecture?

- From generic to specialized technologies

- Examples of technologies that enforce a certain data architecture

- What is the role of software generators in this discussion?

- New technology can only be used optimally if the data architecture is geared to it.

How Three Enterprises Turned Their Big Data into Their Biggest Asset

Organizations worldwide face the challenge of effectively analyzing their exponentially growing data stores. Most data warehouses were designed before the big data explosion and struggle to support modern workloads. To make do, many companies are cutting down on their data pipelines, severely limiting the productivity of data professionals.

This session explores case studies of three organizations that used the power of GPUs to broaden their queries, analyze significantly more data, and extract previously unobtainable insights.

Data Modelstorming: From Business Models to Analytical Models

Have you ever been disappointed with the results of traditional data requirements gathering, especially for BI and data analytics? Have you ever wished you could ‘cut to the chase’ and model the data directly with the people who know it and want to use it? However, that’s not a realistic alternative, is it? Business people don’t do data modeling! But what if that wasn’t the case?

In this lively session, Lawrence Corr shares his favourite collaborative modeling techniques – popularized in books such as ‘Business Model Generation’ and ‘Agile Data Warehouse Design’ – for successfully engaging stakeholders using BEAM (Business Event Analysis and Modeling) and the Business Model Canvas for value-driven BI requirements gathering and star schema design. Learn how visual thinking, narrative, 7Ws and lots of Post-it™ notes can get your stakeholders thinking dimensionally and capturing their own data requirements with agility.

This session will cover:

- The whys of data modelstorming: Why it’s different from traditional data modeling and why we need it

- Drawing user-focused data models: alternatives to entity-relationship diagrams for visualising data opportunities

- Using BEAM (Business Event Analysis and Modeling) to discover key data sources and define rich data sets

- How making toast can encourage collaborative modeling within your organisation

- Modelstorming templates which you can download and start using straight away.
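To make "thinking dimensionally" a little more concrete, the sketch below shows one simplified way a business event described with the 7Ws (who, what, when, where, how many, why, how) could be turned into a star schema outline: the how-many details become measures in a fact table and the other Ws become dimensions. The event, the column names and the mapping rule are hypothetical illustrations, not BEAM notation or the presenter's method.

```python
from dataclasses import dataclass
from typing import Dict, List

# The 7Ws used to describe a business event. "how_many" details become
# measures; the other Ws become dimensions (simplified, hypothetical mapping).
SEVEN_WS = ("who", "what", "when", "where", "how_many", "why", "how")


@dataclass
class StarSchema:
    fact_table: str
    fact_columns: List[str]
    dimension_tables: List[str]


def event_to_star(event: str, details: Dict[str, List[str]]) -> StarSchema:
    """Turn a 7W description of one business event into a star schema outline."""
    fact_columns: List[str] = []
    dimension_tables: List[str] = []
    for w in SEVEN_WS:
        for detail in details.get(w, []):
            if w == "how_many":
                fact_columns.append(detail)            # numeric measure in the fact table
            else:
                dimension_tables.append(f"dim_{detail}")
                fact_columns.append(f"{detail}_key")   # foreign key to that dimension
    return StarSchema(f"fact_{event}", fact_columns, dimension_tables)


# Hypothetical event: "customer orders product"
orders = event_to_star("orders", {
    "who": ["customer"],
    "what": ["product"],
    "when": ["order_date"],
    "where": ["store"],
    "how_many": ["quantity", "revenue"],
    "why": ["promotion"],
    "how": ["channel"],
})
print(orders.fact_table, orders.dimension_tables)
```

In a real modelstorming session the stakeholders, not a rule, decide which details are measures and which deserve their own dimension; the code only shows the resulting shape.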

The modern database ecosystem: the world is changing quickly (Dutch spoken)

Cloud-based services, in-memory databases and massively parallel (ML) database applications dominate today's BI marketing hype.

But what has really changed in the DBMS products on offer over the last decade? Which players are riding the technology curve successfully? Should we worry about the impact of new hardware such as GPUs and non-volatile memory? And should we rely on programmers to reinvent the wheel for every database interaction? What hot technologies are brewing in the kitchens of database companies?

A few topics we will cover in more detail:

- Column stores, a de facto standard for BI pioneered in the Netherlands

- From Hadoop to Apache Spark, when to consider pulling your credit card

- Breaking the walls between the DBMS and application languages (Java, C, etc.)

- Performance, more than just a benchmark number

- Resource provisioning to save money.
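As a rough illustration of why column stores suit BI queries, the sketch below stores the same toy sales table row by row and column by column. An aggregate such as total revenue only needs one attribute, so the columnar layout scans a single list while the row layout walks every full record. The table, its values and the "values read" counter (a crude stand-in for I/O) are hypothetical; real column stores add compression and vectorized execution that this sketch does not model.

```python
from typing import Dict, List, Tuple

# The same toy sales table stored two ways (hypothetical data).
rows: List[Tuple[str, str, float]] = [
    ("2019-01-01", "NL", 120.0),
    ("2019-01-01", "BE", 80.0),
    ("2019-01-02", "NL", 95.5),
]

# Column-oriented layout: one list per attribute.
columns: Dict[str, list] = {
    "date": [r[0] for r in rows],
    "country": [r[1] for r in rows],
    "amount": [r[2] for r in rows],
}


def total_row_store(table: List[Tuple[str, str, float]]) -> Tuple[float, int]:
    """Sum 'amount' in a row layout; every field of every record is read."""
    total, values_read = 0.0, 0
    for record in table:
        values_read += len(record)   # the whole record is fetched
        total += record[2]
    return total, values_read


def total_column_store(cols: Dict[str, list]) -> Tuple[float, int]:
    """Sum 'amount' in a column layout; only that one column is read."""
    amounts = cols["amount"]
    return sum(amounts), len(amounts)


print(total_row_store(rows), total_column_store(columns))
```

Both layouts return the same total; the difference is how much data has to be touched to get there, which grows with the number of columns in a wide BI table.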

Becoming Data Driven – A Data Strategy for Success & Business Insight

More enterprises are seeking to transform themselves into data-driven, digitally based organisations. Many have recognised that this will not be achieved solely by acquiring new technologies and tools. Instead, they are aware that becoming data-driven requires a holistic transformation of existing business models, involving culture change, process redesign and re-engineering, and a step change in data management capabilities.

To deliver this holistic transformation, creating and delivering a coherent and overarching data strategy is essential. Becoming data-driven requires a plan which spells out what an organisation must do to achieve its data transformation goals. A data strategy can be critical in answering questions such as: How ready are we to become data-driven? What data do we need to focus on, now and in the future? What problems and opportunities should we tackle first and why? What part do business intelligence and data warehousing have to play in a data strategy? How do we assess a data strategy’s success?

This session will outline how to produce a data strategy and supporting roadmap, and how to ensure that it becomes a living and agile blueprint for change rather than a statement of aspiration.

This session will cover:

- The relationship between an organisation’s business strategy and data strategy

- What a data strategy is (and is not)

- Building & delivering a data strategy – the key components and steps

- The role of BI/DW in a data strategy – data issues and data needs

- The ‘limit or liberate’ data dilemma and how to resolve it through data governance

- Several use cases of successful data strategies and lessons learned.

Model Deployment for Production & Adoption - Why the Last Task Should be the First Discussed

Most analytic modelers wait until after they’ve built a model to consider deployment. Doing so practically ensures project failure. Their motivations are typically sincere but misplaced: in many cases, analysts want to first ensure that there is something worth deploying. However, very specific design issues must be resolved before meaningful data exploration, data preparation and modeling can begin. The most obvious of the many considerations to address ahead of modeling is whether senior management truly wants a deployed model. Perhaps the perceived purpose of the model is insight, not deployment at all. There is a myth that a model that provides insight will also have the characteristics desirable in a deployed model. It is simply not true, and no one benefits from this lack of foresight and communication. This session covers the essential preparatory considerations for arriving at accountable, deployable and adoptable projects, and Keith will share carefully chosen project design case studies that show how deployment is a critical design consideration.

- Which modeling approach continues to be the most common and important in machine learning

- The iterative process from exploration to modeling to deployment

- Which team members should be consulted in the earliest stages of predictive analytics project design?

- Misconceptions about predictive analytics, modeling, and deployment

- Costly strategic design errors to avoid in predictive analytics projects

- Common styles of deployment.

Adopting Machine Learning at Scale

Jan Veldsink (Lead Artificial Intelligence and Cognitive Technologies at Rabobank) will explain how to set up the organization for Machine Learning projects. In large organizations, accessing and using all the right and relevant data can be challenging. In this presentation Jan will explain how to overcome the problems that arise and how to organize the development cycle, from development to test to deployment, and beyond Agile. He will also show how he has used BigML and how the audience can fit BigML into their strategy. Since humans also learn from examples, he will present showcases of real projects in the financial crime area.

Agile Methods and Data Warehousing: How to Deliver Faster

Most people will agree that data warehousing and business intelligence projects take too long to deliver tangible results. Often, by the time a solution is in place, the business needs have changed. With all the talk about Agile development methods like Scrum and Extreme Programming, the question arises as to how these approaches can be used to deliver data warehouse and business intelligence projects faster. This presentation will look at the 12 principles behind the Agile Manifesto and see how they might be applied in the context of a data warehouse project. The goal is to determine a method or methods for more rapid (2-4 weeks) delivery of portions of an enterprise data warehouse architecture. Real-world examples with metrics will be discussed.

- What are the original 12 principles of Agile?

- How can they be applied to DW/BI projects?

- Real-world examples of successful application of the principles.

Opening by the chairman

Addressing Organizational Resistance to Predictive Analytics and Machine Learning

Many who work within organizations that are in the early stages of their digital transformation are surprised when an accurate model, built with good intentions and capable of producing measurable benefit to the organization, faces organizational resistance. No veteran modeler is surprised by this, because all projects face organizational resistance to some degree. This predictable and eminently manageable problem simply requires attention during the project’s design phase. Proper design will minimize resistance, and most projects will then proceed to their natural conclusion: deployed models that provide measurable and purposeful benefit to the organization. Keith will share carefully chosen case studies based upon real-world projects that reveal why organizational resistance was a problem and how it was addressed.

- Typical reasons why organizational resistance arises

- Identifying and prioritizing valid opportunities that align with organizational priorities

- Which team members should be consulted early in the project design to avoid resistance

- How to estimate ROI during the design phases and achieve ROI in the validation phase

- The importance of a ‘dress rehearsal’ prior to going live.
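The ROI bullet in this list can be made concrete with a back-of-the-envelope calculation. The sketch below assumes a hypothetical use case in which a model flags cases for manual review, with made-up volumes, precision and costs. It estimates ROI during design from an assumed precision and re-checks it in the validation phase with the precision actually measured; the function name and every number are illustrative assumptions, not figures from the session.

```python
def estimated_roi(cases_scored: int, flag_rate: float, precision: float,
                  value_per_hit: float, cost_per_review: float,
                  fixed_cost: float) -> float:
    """ROI as a fraction: (benefit - cost) / cost (hypothetical cost model)."""
    flagged = cases_scored * flag_rate          # cases the model sends for review
    hits = flagged * precision                  # flagged cases that are true positives
    benefit = hits * value_per_hit
    cost = flagged * cost_per_review + fixed_cost
    return (benefit - cost) / cost


# Design phase: precision is an assumption agreed with the business.
design_roi = estimated_roi(100_000, 0.02, 0.25, 400.0, 20.0, 60_000.0)
# Validation phase: same inputs, but with the precision measured on hold-out data.
validation_roi = estimated_roi(100_000, 0.02, 0.20, 400.0, 20.0, 60_000.0)
print(f"design: {design_roi:.0%}, validation: {validation_roi:.0%}")
```

Writing the cost model down during design makes it explicit which inputs the validation phase has to confirm before anyone can claim the ROI was achieved.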

Combining AI and BI in a dynamic data landscape

Business teams are raising the bar on Business Intelligence and data warehouse support. BI competence centers and data managers have to respond to expanding requirements: more data, more insight, maximal quality and accuracy, and appropriate governance, all to guide the improvement of the business. The promise of new technologies such as Artificial Intelligence is attracting increasing business interest, stimulating data-driven innovation and accelerating the development of smarter applications. Data science teams are growing and may take over the lead from BI competence centers.

– How should such developments, which make sense from a business improvement perspective, be supported by data management activity?

– How to control privacy and create an effective data governance strategy?

– It becomes more challenging to design appropriate data warehouses, BI functionality and data access control when business interests change frequently and application development evolves rapidly, driven by “AI initiatives”.

This session will review techniques and technology for effective (meta)data management and smarter BI for widening data landscapes. We will elaborate on the details of an appropriate governance approach supporting advanced Business Intelligence and Insight Exploration functions.

Data Warehousing in Today and Beyond

The world of data warehousing has changed! With the advent of Big Data, Streaming Data, IoT, and the Cloud, what is a modern data management professional to do? It may seem to be a very different world with different concepts, terms, and techniques. Or is it? Lots of people still talk about having a data warehouse or several data marts across their organization. But what does that really mean today? How about the Corporate Information Factory (CIF), the Data Vault, an Operational Data Store (ODS), or just star schemas? Where do they fit now (or do they)? And now we have the Extended Data Warehouse (XDW) as well. How do all these things help us bring value and data-based decisions to our organizations? Where do Big Data and the Cloud fit? Is there a coherent architecture we can define? This talk will endeavor to cut through the hype and the buzzword bingo to help you figure out which parts of this are helpful. I will discuss what I have seen in the real world (working and not working!) and a bit of where I think we are going and need to go, today and beyond.

- What are the traditional/historical approaches

- What have organizations been doing recently

- What are the new options and some of their benefits.

Make Your BI Project Successful with Data-Driven Storytelling (Dutch spoken)

For years, the world of Business Intelligence (BI) has consisted of building reports and dashboards. However, the BI world around us is changing fast. (Statistical) analytics is used more and more, every student receives solid training in R, and the use of data is shifting from IT to the business. But are we ready for this new way of working? Are we able to share the newly gained insights? And can we really change management's gut feeling?

In this presentation we explore this changing world. We discuss how the data-driven storytelling process can be applied within BI projects and which roles it requires, and you will receive practical guidance for communicating newly gained insights through storytelling.

• Insight into the data-driven storytelling process

• Visual data exploration

• Organizational changes

• Communicating through infographics

• Combining data, visualization and a story.

Data Quality & BI/DW – Not yet a marriage made in heaven

The close links between data quality and business intelligence & data warehousing (BI/DW) have long been recognised. Their relationship is symbiotic. Robust data quality is a keystone for successful BI/DW; BI/DW can highlight data shortcomings and drive the need for better data quality. A key driver for the invention of data warehouses was that they would improve the integrity of the data they store and process.

Despite this close bond between these data disciplines, their marriage has not always been a successful one. Our industry is littered with failed BI/DW projects, with an inability to tackle and resolve underlying data quality issues often cited as a primary reason for failure. Today many analytics and data science projects are also failing to meet their goals for the same reason.

Why has the history of BI/DW been plagued with an inability to build and sustain the solid data quality foundation it needs? This presentation tackles these issues and suggests how BI/DW and data quality can and must support each other. The Ancient Greeks understood this. We must do the same.

This session will address:

- What is data quality and why is it the core of effective data management?

- What can happen when it goes wrong – business and BI/DW implications

- The synergies between data quality and BI/DW

- Traditional approaches to tackling data quality for DW / BI

- The shortcomings of these approaches in today’s BI/DW world

- New approaches for tackling today’s data quality challenges

- Several use cases of organisations who have successfully tackled data quality & the key lessons learned.
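For readers who want a feel for what "tackling data quality" can look like in code, the sketch below measures three commonly used data quality dimensions (completeness, validity and uniqueness) on a small, hypothetical customer extract and flags anything below a hypothetical threshold. It is an illustration of rule-based profiling under these assumptions, not the approach presented in the session.

```python
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]


def present(records: List[Record], field: str) -> list:
    """Non-missing values of a field."""
    return [r[field] for r in records if r.get(field) is not None]


def completeness(records: List[Record], field: str) -> float:
    """Share of records in which the field is filled in."""
    return len(present(records, field)) / len(records) if records else 1.0


def validity(records: List[Record], field: str, rule: Callable[[Any], bool]) -> float:
    """Share of filled-in values that satisfy a business rule."""
    values = present(records, field)
    return sum(1 for v in values if rule(v)) / len(values) if values else 1.0


def uniqueness(records: List[Record], field: str) -> float:
    """Share of filled-in values that are distinct (1.0 means no duplicates)."""
    values = present(records, field)
    return len(set(values)) / len(values) if values else 1.0


# Hypothetical extract with a missing e-mail, a malformed e-mail,
# an unknown country code and a duplicate id.
customers = [
    {"id": 1, "email": "a@example.com", "country": "NL"},
    {"id": 2, "email": None, "country": "BE"},
    {"id": 2, "email": "c@example", "country": "XX"},
    {"id": 4, "email": "d@example.com", "country": "NL"},
]

report = {
    "email_completeness": completeness(customers, "email"),
    "email_validity": validity(customers, "email",
                               lambda e: "@" in e and "." in e.split("@")[1]),
    "country_validity": validity(customers, "country", lambda c: c in {"NL", "BE", "DE"}),
    "id_uniqueness": uniqueness(customers, "id"),
}
THRESHOLD = 0.95  # hypothetical acceptance level agreed with data owners
failing = [name for name, score in report.items() if score < THRESHOLD]
print(report, failing)
```

Running such checks at load time, before data reaches the warehouse, is one way to surface quality issues early instead of discovering them in a failed report.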

The New Business Intelligence World: From Batch to Lambda and Kappa (Dutch spoken)

With the arrival of cloud computing it has become possible to process data faster and to let infrastructure scale with the required storage and CPU capacity. Where batch computing used to be the norm and large data warehouses were developed, we now see a transition to data lakes and real-time processing. First came the Lambda architecture, which added a stream processing layer alongside batch processing. Since 2014 we have seen the Kappa architecture drop the batch processing layer of the Lambda architecture altogether.

This presentation focuses on Kappa architectures. What are the advantages and disadvantages of processing data according to this architecture compared with traditional batch processing or the intermediate Lambda architecture? This question will be addressed on the basis of experiences at KPN with a product based on a Kappa architecture: the Data Services Hub.

Central to answering it are the aspects nowadays attributed to innovative technologies: homogenization and decoupling, modularity, connectivity, programmability, and the ability to benefit from ‘user’ traces.

- Creating information and knowledge from data

- How do dashboarding and machine learning in a streaming context compare with the older, more familiar batch processing way of working?

- What are MQTT, Pulsar, Spark and Flink?

- The value of central pub-sub message buses, such as Kafka or RabbitMQ.
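The difference between the Lambda and Kappa architectures can be sketched in a few lines. The example below assumes an in-memory list in place of a real message bus (it uses no Kafka or Pulsar API) and a hypothetical usage-per-customer aggregate; it is not the Data Services Hub. Lambda merges a periodically recomputed batch view with an incremental speed view at query time, whereas Kappa keeps a single streaming job and handles changed logic by replaying the log from the beginning.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Event = Tuple[str, int]  # hypothetical (customer_id, megabytes_used) usage event

# In-memory append-only list standing in for a topic on a message bus.
log: List[Event] = [("alice", 10), ("bob", 5), ("alice", 7), ("bob", 1)]


def lambda_query(events: List[Event], batch_upto: int) -> Dict[str, int]:
    """Lambda: a batch view over older events plus a speed view over newer ones."""
    batch_view: Dict[str, int] = defaultdict(int)
    for key, value in events[:batch_upto]:      # recomputed periodically by the batch layer
        batch_view[key] += value
    speed_view: Dict[str, int] = defaultdict(int)
    for key, value in events[batch_upto:]:      # maintained incrementally by the speed layer
        speed_view[key] += value
    merged = dict(batch_view)                   # serving layer merges both at query time
    for key, value in speed_view.items():
        merged[key] = merged.get(key, 0) + value
    return merged


class KappaJob:
    """Kappa: a single streaming job; reprocessing means replaying the log."""

    def __init__(self, transform: Callable[[int], int]):
        self.transform = transform
        self.state: Dict[str, int] = defaultdict(int)
        self.offset = 0                          # position of the next unread event

    def consume(self, events: List[Event]) -> Dict[str, int]:
        for key, value in events[self.offset:]:
            self.state[key] += self.transform(value)
        self.offset = len(events)
        return dict(self.state)


print(lambda_query(log, batch_upto=2))          # {'alice': 17, 'bob': 6}
v1 = KappaJob(transform=lambda v: v)
print(v1.consume(log))                           # {'alice': 17, 'bob': 6}
log.append(("alice", 3))
print(v1.consume(log))                           # only the new event is processed
# New business logic: start a second job version and replay the log from offset 0.
v2 = KappaJob(transform=lambda v: v * 2)
print(v2.consume(log))
```

The trade-off the session discusses shows up even here: Lambda needs two code paths that must agree, while Kappa needs only one but relies on the log retaining enough history to replay.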

Taking data management automation to the next level

Following up on its successful predecessor, we are happy to announce the release of Quipu 4.0. We’re taking things a step further by introducing the next level in data management automation, using patterns as the guiding principle. This makes data warehouse automation, data migration, big data applications and similar projects much faster and easier. Together with customers, we can quickly develop and add new building blocks, putting customer requirements first. In this presentation we highlight our vision and invite you to be part of our development initiative.

Developing your own Enterprise Data Marketplace (Dutch spoken)

Public data marketplaces have existed for a long time. These are environments that provide all kinds of data products that can be purchased or used. In recent years, organizations have started to develop their own data marketplace: the enterprise data marketplace (EDM). An EDM is developed by the organization itself and supplies data products to internal and external data consumers. Examples of data products are reports, data services, data streams, batch files, etc. The essential difference between an enterprise data warehouse and an enterprise data marketplace is that with the former, users are asked what they need, whereas with the latter, it is assumed that the marketplace owners know what the users need. In other words, we go from demand-driven to supply-driven. This all sounds easy, but it isn’t at all. In this session, the challenges of developing your own enterprise data marketplace are discussed.

- Challenges: research, development, marketing, selling, payment method

- Is special technology needed for developing a data marketplace?

- Differences between data warehouses and marketplaces

- Including a data marketplace in a unified data fabric

- The importance of a searchable data catalog.
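To illustrate what "searchable" might mean at its simplest, the sketch below models a tiny catalog of data products (using the product kinds named above: reports, data services, data streams and batch files) and ranks them by how many query terms appear in their name, description or tags, with an optional filter on kind. The `DataProduct` class, the `search` function and the catalog entries are hypothetical; real catalogs typically add richer metadata, lineage and access control on top of plain keyword matching.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DataProduct:
    name: str
    kind: str          # e.g. report, data service, data stream, batch file
    description: str
    tags: List[str] = field(default_factory=list)


def search(catalog: List[DataProduct], query: str,
           kind: Optional[str] = None) -> List[str]:
    """Rank products by how many query terms occur (as substrings) in their metadata."""
    terms = query.lower().split()
    scored = []
    for product in catalog:
        if kind is not None and product.kind != kind:
            continue                             # optional filter on product kind
        text = " ".join([product.name, product.description, *product.tags]).lower()
        score = sum(1 for t in terms if t in text)
        if score:
            scored.append((score, product.name))
    # Highest score first; ties broken alphabetically by name.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [name for _, name in scored]


catalog = [
    DataProduct("Monthly churn report", "report", "Customer churn per region", ["customer", "churn"]),
    DataProduct("Customer master API", "data service", "Current customer records", ["customer", "mdm"]),
    DataProduct("Payment events", "data stream", "Real-time card payment events", ["payments"]),
]
print(search(catalog, "customer churn"))
print(search(catalog, "customer", kind="data service"))
```

Even a crude ranking like this makes the point of a supply-driven marketplace: consumers have to be able to find the products the owners decided to offer.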

Opening by the chairman

Addressing Organizational Resistance to Predictive Analytics and Machine Learning

Many who work within organizations that are in the early stages of their digital transformation are surprised when an accurate model — built with good intentions and capable of producing measurable benefit to the organization — faces organizational resistance. No veteran modeler is surprised by this because all projects face some organizational resistance to some degree. This predictable and eminently manageable problem simply requires attention during the project’s design phase. Proper design will minimize resistance and most projects will proceed to their natural conclusion – deployed models that provide measurable and purposeful benefit to the organization. Keith will share carefully chosen case studies based upon real world projects that reveal why organizational resistance was a problem and how it was addressed.

- Typical reasons why organizational resistance arises.

- Identifying and prioritizing valid opportunities that align with organizational priorities

- Which teams members should be consulted early in the project design to avoid resistance

- How to estimate ROI during the design phases and achieve ROI in the validation phase

- The importance of a ‘dress rehearsal’ prior to going live.

Combining AI and BI in a dynamic data landscape

Business teams are raising the bar on Business Intelligence and Datawarehouse support. BI competence centers and data managers have to respond to expanding requirements: offer more data, more insight, maximal quality and accuracy, ensuring appropriate governance, etc. All to create guidance for enhancing their business. The promise of new technologies such as Artificial Intelligence is attracting increased business interest and stimulates data-driven innovation and accelerated development of smarter applications. Data Scientist teams grow, and can take over the lead from BI competence centers.

– How should such developments, which make sense from a business improvement perspective, be supported by data management activity?

– How to control privacy and create an effective data governance strategy?

– It becomes more challenging to design appropriate data warehouses, BI functionality and data access control when business interests change frequently and application development evolves rapidly, driven by “AI initiatives”.

This session will review techniques and technology for effective (meta) data management and smarter BI for widening data landscapes. We will elaborate the details for an appropriate governance approach supporting advanced Business Intelligence and Insight Exploration functions.

Read lessData Warehousing in Today and Beyond

The world of data warehousing has changed! With the advent of Big Data, Streaming Data, IoT, and The Cloud, what is a modern data management professional to do? It may seem to be a very different world with different concepts, terms, and techniques. Or is it? Lots of people still talk about having a data warehouse or several data marts across their organization. But what does that really mean today? How about the Corporate Information Factory (CIF), the Data Vault, an Operational Data Store (ODS), or just star schemas? Where do they fit now (or do they)? And now we have the Extended Data Warehouse (XDW) as well. How do all these things help us bring value and data-based decisions to our organizations? Where do Big Data and the Cloud fit? Is there a coherent architecture we can define? This talk will endeavor to cut through the hype and the buzzword bingo to help you figure out what part of this is helpful. I will discuss what I have seen in the real world (working and not working!) and a bit of where I think we are going and need to go in today and beyond.

- What are the traditional/historical approaches?

- What have organizations been doing recently?

- What are the new options, and what are their benefits?

Make Your BI Project Successful with Data-Driven Storytelling (Dutch spoken)

For years, the world of Business Intelligence (BI) has consisted of building reports and dashboards. However, the BI world around us is changing rapidly. (Statistical) analytics is being used more and more, every student receives thorough training in R, and the use of data is shifting from IT to the business. But are we ready for this new way of working? Are we able to share the newly gained insights? And can we really change management’s gut feeling?

In this presentation we explore this changing world. We discuss how to apply the data-driven storytelling process within BI projects and which roles it requires, and you will receive practical guidelines for communicating newly gained insights through storytelling.

• Insight into the data-driven storytelling process

• Visual data exploration

• Organizational changes

• Communicating through infographics

• Combining data, visualization and a story.

Data Quality & BI/DW – Not yet a marriage made in heaven

The close links between data quality and business intelligence & data warehousing (BI/DW) have long been recognised. Their relationship is symbiotic. Robust data quality is a keystone for successful BI/DW; BI/DW can highlight data shortcomings and drive the need for better data quality. A key driver for the invention of data warehouses was that they would improve the integrity of the data they store and process.

Despite this close bond between these data disciplines, their marriage has not always been a successful one. Our industry is littered with failed BI/DW projects, with an inability to tackle and resolve underlying data quality issues often cited as a primary reason for failure. Today many analytics and data science projects are also failing to meet their goals for the same reason.

Why has the history of BI/DW been plagued with an inability to build and sustain the solid data quality foundation it needs? This presentation tackles these issues and suggests how BI/DW and data quality can and must support each other. The Ancient Greeks understood this. We must do the same.

This session will address:

- What is data quality and why is it the core of effective data management?

- What can happen when it goes wrong – business and BI/DW implications

- The synergies between data quality and BI/DW

- Traditional approaches to tackling data quality for BI/DW

- The shortcomings of these approaches in today’s BI/DW world

- New approaches for tackling today’s data quality challenges

- Several use cases of organisations who have successfully tackled data quality & the key lessons learned.
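To make the idea of measurable data quality concrete, here is a minimal Python sketch of rule-based checks that report a pass rate per rule and list the failing records, the kind of measure a BI/DW team might publish alongside its reports. The record layout, the three rules and the list of valid country codes are hypothetical assumptions for illustration; the session does not prescribe them.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical customer records; field names are illustrative only.
records = [
    {"customer_id": 1, "email": "a@example.com", "country": "NL"},
    {"customer_id": 2, "email": None, "country": "NL"},
    {"customer_id": 3, "email": "c@example", "country": "XX"},
    {"customer_id": 4, "email": "d@example.com", "country": "BE"},
]

# Hypothetical reference list used by the validity rule.
VALID_COUNTRIES = {"NL", "BE", "DE"}


@dataclass
class Rule:
    name: str
    check: Callable[[dict], bool]  # returns True when the record passes


rules = [
    Rule("email is present", lambda r: r["email"] is not None),
    Rule("email has a domain",
         lambda r: r["email"] is not None and "." in r["email"].split("@")[-1]),
    Rule("country is a known code", lambda r: r["country"] in VALID_COUNTRIES),
]


def score(records, rules):
    """Return the pass rate (0..1) of each rule across all records."""
    return {rule.name: sum(rule.check(r) for r in records) / len(records)
            for rule in rules}


def failures(records, rules):
    """List (customer_id, rule name) pairs for every failed check."""
    return [(r["customer_id"], rule.name)
            for r in records for rule in rules if not rule.check(r)]


if __name__ == "__main__":
    for name, rate in score(records, rules).items():
        print(f"{name}: {rate:.0%}")
```

Publishing such pass rates per load makes quality trends visible to both the data quality and the BI/DW side.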

The New Business Intelligence World: from Batch to Lambda and Kappa (Dutch spoken)

With the advent of cloud computing, it has become possible to process data faster and to let infrastructure scale with the required storage and CPU capacity. Where batch computing used to be the norm and large data warehouses were built, we now see a transition to data lakes and real-time processing. First came the lambda architecture, which added a stream processing layer alongside batch processing. And since 2014 we have seen the kappa architecture drop the batch processing layer of the lambda architecture altogether.
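The difference between the two architectures can be sketched in a few lines of Python. In a Kappa architecture there is only one processing path: a streaming job reads an append-only event log, and when the logic changes, the same job is re-run by replaying the log from the beginning instead of recomputing in a separate batch layer. This is a toy, in-memory sketch under stated assumptions: the list stands in for a durable log such as a Kafka topic, and the events and aggregation rules are hypothetical; it is not how KPN’s Data Services Hub is built.

```python
from collections import defaultdict

# Append-only event log standing in for a durable topic (assumption: in-memory list).
# Hypothetical events: (customer, megabytes of data used).
event_log = [
    ("alice", 120), ("bob", 40), ("alice", 30), ("carol", 500), ("bob", 60),
]


def run_stream_job(log, aggregate, start_offset=0):
    """Process events one at a time from start_offset, keeping running state.

    In a Kappa architecture this single streaming path replaces the separate
    batch layer of a Lambda architecture.
    """
    state = defaultdict(int)
    for offset in range(start_offset, len(log)):
        customer, mb = log[offset]
        state[customer] = aggregate(state[customer], mb)
    return dict(state)


# Version 1 of the job: total usage per customer.
v1 = run_stream_job(event_log, lambda acc, mb: acc + mb)

# Changed requirement: only count events above 50 MB.
# Instead of a batch recompute, replay the same log from offset 0 with new logic.
v2 = run_stream_job(event_log, lambda acc, mb: (acc + mb) if mb > 50 else acc)

print(v1)  # {'alice': 150, 'bob': 100, 'carol': 500}
print(v2)  # {'alice': 120, 'bob': 60, 'carol': 500}
```

The design choice this illustrates is that reprocessing becomes "run the same code again over retained history", which is why Kappa architectures depend on a log that keeps enough history to replay.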

This presentation focuses on Kappa architectures. What are the advantages and disadvantages of processing data according to this architecture compared with traditional batch processing or the intermediate lambda architecture? This question is addressed using experiences at KPN with a product based on a Kappa architecture: the Data Services Hub.

Central to the answer are the aspects nowadays attributed to innovative technologies: homogenization and decoupling, modularity, connectivity, programmability, and the ability to benefit from ‘user’ traces.

- Creating information and knowledge from data

- How do dashboarding and machine learning in a streaming context compare with the older, more familiar batch processing way of working?

- What is the significance of MQTT, Pulsar, Spark and Flink?

- The value of central pub-sub message buses, such as Kafka or RabbitMQ.
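The central idea of a pub-sub message bus is that producers publish to a named topic without knowing who consumes it, and every subscriber to that topic receives each message. The Python class below is a hypothetical, in-process illustration of that pattern only; it is not the API of Kafka or RabbitMQ, which add durability, partitioning, consumer groups and delivery guarantees.

```python
from collections import defaultdict
from typing import Callable


class MessageBus:
    """Toy in-process publish/subscribe bus (hypothetical, pattern only).

    Producers publish to a named topic without knowing who consumes it;
    every subscriber of that topic receives each message (fan-out).
    """

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        """Deliver message to all handlers of topic; return the delivery count."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = MessageBus()
dashboard_feed: list[dict] = []
alerts: list[dict] = []

# Two independent consumers of the same hypothetical 'meter-readings' topic.
bus.subscribe("meter-readings", dashboard_feed.append)
bus.subscribe("meter-readings", lambda m: alerts.append(m) if m["value"] > 100 else None)

bus.publish("meter-readings", {"device": "d1", "value": 42})
bus.publish("meter-readings", {"device": "d2", "value": 180})
delivered = bus.publish("unused-topic", {"device": "d3", "value": 1})  # no subscribers
```

Adding a third consumer (for example a machine-learning scorer) would require no change to the producer, which is the decoupling the session attributes to central message buses.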

Taking data management automation to the next level

Following the success of its predecessor, we are happy to announce the release of Quipu 4.0. It takes data management automation to the next level by using patterns as its guiding principle, making data warehouse automation, data migration, big data applications and similar projects much faster and easier. Together with customers we can quickly develop and add new building blocks, putting customer requirements first. In this presentation we outline our vision and invite you to take part in our development initiative.

Developing your own Enterprise Data Marketplace (Dutch spoken)

We have known public data marketplaces for a long time. These are environments that offer all kinds of data products that can be purchased or used. In recent years, organizations have started to develop their own data marketplace: the enterprise data marketplace (EDM). An EDM is developed by the organization itself and supplies data products to internal and external data consumers. Examples of data products are reports, data services, data streams, batch files, etcetera. The essential difference between an enterprise data warehouse and an enterprise data marketplace is that with the former, users are asked what they need, whereas with the latter, it is assumed that the marketplace owners know what the users need. In other words, we go from demand-driven to supply-driven. This all sounds easy, but it isn’t at all. In this session, the challenges of developing your own enterprise data marketplace are discussed.

- Challenges: research, development, marketing, selling, payment method

- Is special technology needed for developing a data marketplace?

- Differences between data warehouses and marketplaces

- Including a data marketplace in a unified data fabric

- The importance of a searchable data catalog.
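A searchable data catalog is, at its core, a set of metadata records about data products plus a search function over them. The following Python sketch assumes a hypothetical, deliberately small metadata model (name, kind, owner, description, tags) and simple keyword matching; real catalog products offer much richer metadata, lineage and ranking.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DataProduct:
    name: str
    kind: str          # e.g. "report", "data service", "data stream", "batch file"
    owner: str
    description: str
    tags: set[str] = field(default_factory=set)


def search(catalog: list[DataProduct], query: str, kind: Optional[str] = None) -> list[str]:
    """Return names of products whose name, description or tags contain every query term,
    optionally restricted to one kind of data product."""
    terms = query.lower().split()
    hits = []
    for product in catalog:
        text = " ".join([product.name, product.description, *product.tags]).lower()
        if all(term in text for term in terms) and (kind is None or product.kind == kind):
            hits.append(product.name)
    return hits


# Hypothetical catalog entries.
catalog = [
    DataProduct("Monthly churn report", "report", "Sales BI",
                "Churn per region per month", {"churn", "customers"}),
    DataProduct("Customer master API", "data service", "CRM team",
                "Current customer attributes", {"customers", "master data"}),
    DataProduct("Order events", "data stream", "E-commerce",
                "Real-time order placements", {"orders"}),
]

print(search(catalog, "customers"))
print(search(catalog, "customers", kind="data service"))
```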

Agile Data Warehouse Design

Agile techniques emphasise the early and frequent delivery of working software, stakeholder collaboration, responsiveness to change and waste elimination. They have revolutionised application development and are increasingly being adopted by DW/BI teams. This course provides practical tools and techniques for applying agility to the design of DW/BI database schemas – the earliest needed and most important working software for BI.

The course contrasts agile and non-agile DW/BI development and highlights the inherent failings of traditional BI requirements analysis and data modeling. Through classroom sessions and team exercises, attendees will discover how modelstorming (modeling + brainstorming) data requirements directly with BI stakeholders overcomes these limitations.

Learning objectives

You will learn how to:

- Model BI requirements with BI stakeholders using inclusive tools and visual thinking techniques

- Rapidly translate BI requirements into efficient, flexible data warehouse designs

- Identify and solve common BI problems – before they occur – using dimensional design patterns

- Plan, design and incrementally develop BI solutions with agility

Who Should Attend

- Business and IT professionals who want to develop better BI solutions faster.

- Business analysts, scrum masters, data modelers/architects, DBAs and application developers new to DW/BI will benefit from the solid grounding in dimensional modeling provided.

- Experienced DW/BI practitioners will find that the course updates their hard-earned industry knowledge with the latest ideas on agile modeling, data warehouse design patterns and business model innovation.

You receive a free copy of the book Agile Data Warehouse Design by Lawrence Corr.

Course description

Day 1: Modelstorming – Agile BI Requirements Gathering

Agile Dimensional Modeling Fundamentals

- BI/DW design requirements, challenges and opportunities: the need for agility

- Modeling with BI stakeholders: the case for collaborative data modeling

- Modeling for measurement: the case for dimensional modeling, star schemas, facts & dimensions

- Thinking dimensionally using the 7Ws (who, what, when, where, how many, why & how)

- Business Event Analysis and Modeling (BEAM✲): an agile approach to dimensional modeling
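For readers new to dimensional modeling, a star schema can be shown in a few lines using Python’s built-in `sqlite3` module: one fact table holding the measures of a business event, surrounded by dimension tables that describe it. The table names, columns and rows are hypothetical, and the sketch shows only the resulting schema, not the BEAM✲ modelstorming process used to discover it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Dimensions describe the who/what/when of a business event (hypothetical names).
cur.executescript("""
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT);
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, product_name TEXT, category TEXT);
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, customer_name TEXT, segment TEXT);

-- The fact table records the 'how many' of each sale, keyed by its dimensions.
CREATE TABLE fact_sales (
    date_key INTEGER REFERENCES dim_date(date_key),
    product_key INTEGER REFERENCES dim_product(product_key),
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    quantity INTEGER,
    revenue REAL
);
""")

cur.executemany("INSERT INTO dim_date VALUES (?, ?, ?)", [
    (20240105, "2024-01-05", "2024-01"), (20240210, "2024-02-10", "2024-02")])
cur.executemany("INSERT INTO dim_product VALUES (?, ?, ?)", [
    (1, "Espresso machine", "Appliances"), (2, "Coffee beans", "Groceries")])
cur.executemany("INSERT INTO dim_customer VALUES (?, ?, ?)", [
    (1, "Acme BV", "B2B"), (2, "J. Jansen", "B2C")])
cur.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?)", [
    (20240105, 1, 1, 2, 900.0),
    (20240105, 2, 2, 5, 50.0),
    (20240210, 2, 1, 20, 180.0),
])

# A typical star join: group by dimension attributes, sum the additive facts.
rows = cur.execute("""
    SELECT d.month, p.category, SUM(f.revenue)
    FROM fact_sales f
    JOIN dim_date d ON f.date_key = d.date_key
    JOIN dim_product p ON f.product_key = p.product_key
    GROUP BY d.month, p.category
    ORDER BY d.month, p.category
""").fetchall()
print(rows)
```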

Dimensional Modelstorming Tools

- Data stories, themes and BEAM✲ tables: modeling BI data requirements by example

- Timelines: modeling time and process measurement

- Hierarchy charts: modeling dimensional drill-downs and rollups

- Change stories: capturing historical reporting requirements (slowly changing dimension rules)

- Storyboarding the data warehouse design: matrix planning and estimating for agile BI development

- The Business Model Canvas: aligning DW/BI design with business model definition and innovation

- The BI Model Canvas: a systematic approach to BI & star schema design
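Change stories end up as slowly changing dimension rules. A common rule is Type 2, in which a change to a tracked attribute closes the current dimension row and adds a new version with its own surrogate key, so that historical reports stay correct. The Python sketch below assumes a hypothetical customer dimension that tracks `city` with Type 2 history; it illustrates the technique, not the course’s notation.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class CustomerRow:
    surrogate_key: int        # warehouse key, one per version of the customer
    customer_id: str          # business (natural) key from the source system
    city: str                 # attribute tracked with Type 2 history
    valid_from: date
    valid_to: Optional[date]  # None means "current version"


def apply_type2_change(dim, customer_id, new_city, change_date):
    """Close the current row and add a new version if the tracked attribute changed."""
    current = next(r for r in dim if r.customer_id == customer_id and r.valid_to is None)
    if current.city == new_city:
        return dim  # nothing to record
    current.valid_to = change_date
    next_key = max(r.surrogate_key for r in dim) + 1
    dim.append(CustomerRow(next_key, customer_id, new_city, change_date, None))
    return dim


def as_of(dim, customer_id, when):
    """Return the version of a customer that was valid on a given date."""
    return next(r for r in dim
                if r.customer_id == customer_id
                and r.valid_from <= when
                and (r.valid_to is None or when < r.valid_to))


# Hypothetical history: customer C042 moves from Utrecht to Rotterdam.
dim_customer = [CustomerRow(1, "C042", "Utrecht", date(2020, 1, 1), None)]
apply_type2_change(dim_customer, "C042", "Rotterdam", date(2023, 6, 1))
```

Facts loaded before the move keep pointing at surrogate key 1, so they continue to report under Utrecht.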

Day 2: Agile Star Schema Design

Star Schema Design

- Test-driven design: agile/lean data profiling for validating and improving requirements models

- Data warehouse reuse: identifying, defining and developing conformed dimensions and facts

- Balancing ‘just enough design up front’ (JEDUF) and ‘just in time’ (JIT) data modeling

- Designing flexible, high performance star schemas: maximising the benefits of surrogate keys

- Refactoring star schemas: responding to change, dealing with data debt

- Lean (minimum viable) DW documentation: enhanced star schemas, DW matrix
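Data profiling can test a requirements model before any ETL is written. One useful check is whether a candidate dimension attribute really has a single value per business key. This Python sketch profiles a hypothetical sample extract; the column names and rows are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical sample extract from a source system.
rows = [
    {"product_id": "P1", "category": "Coffee", "supplier": "S1"},
    {"product_id": "P1", "category": "Coffee", "supplier": "S2"},
    {"product_id": "P2", "category": "Tea", "supplier": None},
    {"product_id": "P3", "category": None, "supplier": "S1"},
]


def profile(rows, key, column):
    """Summarise a candidate attribute: null rate, distinct values, and whether
    every business key maps to at most one non-null value (i.e. whether it can be
    a simple attribute of that key's dimension)."""
    values = [r[column] for r in rows]
    per_key = defaultdict(set)
    for r in rows:
        if r[column] is not None:
            per_key[r[key]].add(r[column])
    return {
        "null_rate": sum(v is None for v in values) / len(values),
        "distinct": len({v for v in values if v is not None}),
        "single_valued": all(len(vals) <= 1 for vals in per_key.values()),
    }


for col in ("category", "supplier"):
    print(col, profile(rows, "product_id", col))
```

Here `category` passes the single-value check, while `supplier` fails because product P1 has two suppliers, which suggests it may belong in a multi-valued dimension or in the fact table rather than as a simple product attribute.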

How Much/How Many: Designing facts, measures and KPIs (Key Performance Indicators)

- Fact types: transactions, periodic snapshots, accumulating snapshots

- Fact additivity: additive, semi-additive and non-additive measures

- Fact performance and usability: indexing, partitioning, aggregating and consolidating facts
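Additivity determines how a measure may be aggregated. The Python sketch below uses hypothetical data to contrast a semi-additive measure (month-end account balances from a periodic snapshot, which can be summed across accounts but not across time) with a non-additive one (a margin percentage, which must be recomputed from its additive parts).

```python
# Hypothetical periodic snapshot: (month, account, month-end balance).
balances = [
    ("2024-01", "acct-1", 100.0), ("2024-01", "acct-2", 50.0),
    ("2024-02", "acct-1", 120.0), ("2024-02", "acct-2", 30.0),
]


# Semi-additive: summing across accounts *within* one month is meaningful...
def total_balance(month):
    return sum(b for m, _, b in balances if m == month)


# ...but summing across months is not; use the closing (latest) or average balance.
def closing_balance(account):
    return max((m, b) for m, a, b in balances if a == account)[1]


def average_balance(account):
    vals = [b for _, a, b in balances if a == account]
    return sum(vals) / len(vals)


# Non-additive: a margin percentage is recomputed from additive parts,
# never summed or averaged directly across rows.
sales = [(200.0, 50.0), (100.0, 10.0)]  # (revenue, profit) per transaction
margin_pct = 100 * sum(p for _, p in sales) / sum(r for r, _ in sales)
```

Averaging the two row-level margins (25% and 10%) would give 17.5%, whereas recomputing from the summed revenue and profit gives the correct 20%.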

Day 3: Dimensional Design Patterns

Who & What dimension patterns: customers, employees, products and services

- Large populations with rapidly changing dimensional attributes: mini-dimensions & customer facts

- Customer segmentation: business to business (B2B), business to consumer (B2C) dimensions

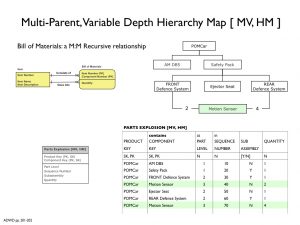

- Recursive customer relationships and organisation structures: variable-depth hierarchy maps

- Current and historical reporting perspectives: hybrid slowly changing dimensions

- Mixed business models: heterogeneous products/services, diverse attribution, ragged hierarchies

- Product and service decomposition: component (bill of materials) and product unbundling analysis
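A variable-depth hierarchy map (often called a hierarchy bridge table) flattens a recursive parent-child structure into one row per ancestor/descendant pair, so a fact can be rolled up to any level with a simple join instead of recursion at query time. The Python sketch below builds such a map for a hypothetical organisation structure and uses it for a roll-up; the names and revenue figures are illustrative assumptions.

```python
# Hypothetical recursive organisation structure: child -> parent (None = top).
parent_of = {
    "Holding": None,
    "Benelux": "Holding",
    "NL": "Benelux",
    "BE": "Benelux",
    "Rotterdam office": "NL",
}


def build_hierarchy_map(parent_of):
    """Flatten a variable-depth tree into (ancestor, descendant, depth) rows,
    including each node paired with itself at depth 0."""
    rows = []
    for node in parent_of:
        ancestor, depth = node, 0
        while ancestor is not None:
            rows.append((ancestor, node, depth))
            ancestor, depth = parent_of[ancestor], depth + 1
    return rows


hierarchy_map = build_hierarchy_map(parent_of)

# Facts are recorded against the unit that booked them, at any level.
revenue = {"NL": 300.0, "BE": 200.0, "Rotterdam office": 50.0}


def rollup(ancestor):
    """Total revenue for an ancestor and everything beneath it, at any depth."""
    return sum(revenue.get(desc, 0.0)
               for anc, desc, _ in hierarchy_map if anc == ancestor)
```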

When & Where dimension patterns: dates, times and locations

- Flexible date handling, ad-hoc date ranges and year-to-date analysis

- Modeling time as dimensions and facts

- Multinational BI: national-language reporting, multiple currencies, time zones & national calendars

- Understanding journeys and trajectories: modeling events with multiple geographies
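Flexible date handling usually relies on a date dimension with one row per day and pre-computed attributes, so that ad-hoc ranges and year-to-date comparisons become simple filters. This Python sketch builds such a dimension and compares year-to-date totals with the same period of the previous year; the attribute set and the sales figures are hypothetical.

```python
from datetime import date, timedelta


def build_date_dimension(start, end):
    """One row per calendar day with pre-computed attributes for filtering."""
    rows, day = [], start
    while day <= end:
        rows.append({
            "date": day,
            "year": day.year,
            "month": day.month,
            "day": day.day,
            "is_weekend": day.weekday() >= 5,  # Saturday or Sunday
        })
        day += timedelta(days=1)
    return rows


dim_date = build_date_dimension(date(2023, 1, 1), date(2024, 12, 31))

# Hypothetical daily sales facts keyed by date.
sales = {date(2023, 2, 1): 10.0, date(2023, 9, 1): 40.0,
         date(2024, 2, 1): 15.0, date(2024, 9, 1): 60.0}


def year_to_date(as_of):
    """Return (YTD this year, same period last year) by filtering on date attributes."""
    cutoff = (as_of.month, as_of.day)

    def ytd(year):
        return sum(sales.get(r["date"], 0.0) for r in dim_date
                   if r["year"] == year and (r["month"], r["day"]) <= cutoff)

    return ytd(as_of.year), ytd(as_of.year - 1)


print(year_to_date(date(2024, 6, 30)))
```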

Why & How dimension patterns: cause and effect

- Causal factors: triggering events, referrals, promotions, weather and exception reason dimensions

- Fact specific dimensions: transaction and event status descriptions

- Multi-valued dimensions: bridge tables, weighting factors, impact and ‘correctly weighted’ analysis

- Behaviour Tagging: modeling causation and outcome, dimensional overloading, step dimensions
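A bridge table with weighting factors resolves a multi-valued dimension, for example bank accounts with several holders. The Python sketch below contrasts impact analysis (every holder is credited with the full balance, so totals double count) with correctly weighted analysis (weights per account sum to 1, so totals reconcile). The accounts, holders and weights are hypothetical.

```python
# Hypothetical fact: one balance per account.
account_balance = {"A1": 1000.0, "A2": 400.0}

# Bridge table: (account, holder, weighting factor); weights per account sum to 1.
bridge = [
    ("A1", "Ann", 0.5), ("A1", "Bob", 0.5),
    ("A2", "Bob", 1.0),
]


def check_weights(bridge):
    """Verify that the weighting factors of each account group sum to 1."""
    totals = {}
    for account, _, weight in bridge:
        totals[account] = totals.get(account, 0.0) + weight
    return all(abs(t - 1.0) < 1e-9 for t in totals.values())


def balance_by_holder(weighted):
    """Impact (unweighted) analysis credits the full balance to every holder;
    weighted analysis allocates it so the grand total still reconciles."""
    result = {}
    for account, holder, weight in bridge:
        share = account_balance[account] * (weight if weighted else 1.0)
        result[holder] = result.get(holder, 0.0) + share
    return result
```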

Putting Machine Learning to Work

Supervised learning solves modern analytics challenges and drives informed organizational decisions. Although the predictive power of machine learning models can be very impressive, there is no benefit unless they inform value-focused actions. Models must be deployed in an automated fashion to continually support decision making for residual impact. And while unsupervised methods open powerful analytic opportunities, they do not come with a clear path to deployment. This course will clarify when each approach best fits the business need and show you how to derive value from both approaches.

Regression, decision trees, neural networks – along with many other supervised learning techniques – provide powerful predictive insights when historical outcome data is available. Once built, supervised learning models produce a propensity score which can be used to support or automate decision making throughout the organization. We will explore how these moving parts fit together strategically.
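To illustrate how a supervised model yields a propensity score that can drive an automated decision, here is a minimal Python sketch. It fits a one-feature logistic regression by plain gradient descent on hypothetical churn history and then applies a business threshold. The data, the 0.5 threshold and the ‘retention offer’ action are assumptions; in practice a library implementation and more features would be used.

```python
import math

# Hypothetical historical outcomes: (support calls last quarter, churned? 1/0).
history = [(0, 0), (1, 0), (1, 0), (2, 0), (3, 1), (4, 1), (5, 1), (6, 1)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(data, lr=0.1, epochs=2000):
    """Fit p(churn) = sigmoid(w * x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in data) / n
        grad_b = sum((sigmoid(w * x + b) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = train_logistic(history)


def propensity(calls):
    """Score a new customer: estimated probability of churn."""
    return sigmoid(w * calls + b)


def next_action(calls, threshold=0.5):
    """Turn the score into an automated decision via a (hypothetical) business threshold."""
    return "retention offer" if propensity(calls) >= threshold else "no action"
```

The threshold is a business choice rather than a modeling one: lowering it contacts more customers and trades cost against missed churners.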

Unsupervised methods like cluster analysis, anomaly detection, and association rules are exploratory in nature and don’t generate a propensity score in the same way that supervised learning methods do. So how do you take these models and automate them in support of organizational decision-making? This course will show you how.
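One common way to automate an unsupervised model is to fit clusters once, persist the centroids, and then score each new record by assigning it to the nearest centroid. The Python sketch below implements plain k-means (Lloyd’s algorithm) and such an assignment step; the two-feature customer data are hypothetical, and a real deployment would also standardize features first.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Plain Lloyd's k-means on 2-D points; returns the final centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster; keep it if the cluster is empty.
        centroids = [
            tuple(sum(c) / len(cluster) for c in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids


def assign(point, centroids):
    """Deployment step: score a new record by its nearest centroid."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))


# Hypothetical customers: (visits per month, average basket in tens of euros).
customers = [(1, 1), (1.5, 2), (2, 1.5), (8, 8), (8.5, 9), (9, 8.5)]
centroids = kmeans(customers, k=2)
new_customer_segment = assign((1.2, 1.4), centroids)
```

Persisting only the centroids keeps the deployed artifact small; the cluster labels still need a business interpretation before they can drive actions.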

This course will demonstrate a variety of examples, starting with the exploration and interpretation of candidate models and their applications. Options for acting on results will be explored. You will also observe how a mixture of models, including business rules, supervised models and unsupervised models, is used in real-world situations for problems such as insurance and fraud detection.

You Will Learn

- When to apply supervised versus unsupervised modeling methods

- Options for inserting machine learning into the decision making of your organization

- How to use multiple models for estimation and classification

- Effective techniques for deploying the results of unsupervised learning

- How to interpret and monitor your models for continual improvement

- How to creatively combine supervised and unsupervised models for greater performance

Who is it for?

Analytic Practitioners, Data Scientists, IT Professionals, Technology Planners, Consultants, Business Analysts, Analytic Project Leaders.

Topics Covered

1. Model Development Introduction

Current Trends in AI, Machine Learning and Predictive Analytics

- Algorithms in the News: Deep Learning

- The Modeling Software Landscape

- The Rise of R and Python: The Impact on Modeling and Deployment

- Do I Need to Know About Statistics to Build Predictive Models?

2. Strategic and Tactical Considerations in Binary Classification

- What Is an Algorithm?

- Is a “Black Box” Algorithm an Option for Me?

- Issues Unique to Classification Problems

- Why Classification Projects are So Common

- Why are there so many Algorithms?

3. Data Preparation for Supervised Models

- Data Preparation Law

- Integrate Data Subtasks

- Aggregations: Numerous Options

- Restructure: Numerous Options

- Data Construction

- Ratios and Deltas

- Date Math

- Extract Subtask
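Several of the data preparation subtasks above (aggregation, restructuring to one row per customer, ratios, deltas and date math) can be shown together. This Python sketch turns hypothetical transaction-level rows into customer-level model features; the feature names and the split date are assumptions for illustration.

```python
from collections import defaultdict
from datetime import date

# Hypothetical transaction-level data: (customer, date, amount).
transactions = [
    ("C1", date(2024, 1, 10), 100.0),
    ("C1", date(2024, 3, 5), 50.0),
    ("C2", date(2024, 2, 20), 80.0),
    ("C2", date(2024, 3, 25), 120.0),
]


def build_features(transactions, as_of, split):
    """Restructure to one row per customer, the usual shape for supervised models.

    `split` divides history into an earlier and a recent period so that a delta
    between them can be computed.
    """
    per_customer = defaultdict(list)
    for customer, day, amount in transactions:
        per_customer[customer].append((day, amount))
    features = {}
    for customer, rows in per_customer.items():
        early = sum(a for d, a in rows if d < split)
        recent = sum(a for d, a in rows if d >= split)
        total = early + recent
        features[customer] = {
            "total_spend": total,                                        # aggregation
            "recent_share": recent / total if total else 0.0,            # ratio
            "spend_delta": recent - early,                               # delta
            "days_since_last": (as_of - max(d for d, _ in rows)).days,   # date math
        }
    return features


features = build_features(transactions, as_of=date(2024, 4, 1), split=date(2024, 3, 1))
```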

4. The Tasks of the Model Phase

- Optimizing Data for Different Algorithms

- Model Assessment

- Evaluate Model Results

- Check Plausibility

- Check Reliability

- Model Accuracy and Stability

- Lift and Gains Charts

- Evaluate Model Results

- Modeling Demonstration

- Assess Model Viability

- Select Final Models

- Why Accuracy and Stability are Not Enough

- What to Look for in Model Performance

- Exercise Breakout Session

- Select Final Models

- Create & Document Modeling Plan

- Determine Readiness for Deployment

- What are Potential Deployment Challenges for Each Candidate Model?
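Lift and gains charts are built from a simple table: rank records by model score, cut them into equal-size groups, and track what share of all positives has been captured at each depth. The Python sketch below computes that table for hypothetical scores and outcomes; it uses four groups for brevity where deciles are more usual.

```python
def gains_table(scores, outcomes, bins=4):
    """Sort by model score (best first), cut into equal-size bins, and report
    (depth, cumulative gain, cumulative lift) per bin, rounded to 2 decimals."""
    ranked = sorted(zip(scores, outcomes), key=lambda pair: pair[0], reverse=True)
    total_pos = sum(outcomes)
    n = len(ranked)
    table, captured = [], 0
    for b in range(1, bins + 1):
        start, end = (b - 1) * n // bins, b * n // bins
        captured += sum(y for _, y in ranked[start:end])
        depth = end / n                 # fraction of population contacted
        gain = captured / total_pos     # fraction of all positives found
        table.append((round(depth, 2), round(gain, 2), round(gain / depth, 2)))
    return table


# Hypothetical model scores and actual outcomes (1 = positive).
scores = [0.9, 0.8, 0.75, 0.6, 0.5, 0.4, 0.3, 0.2]
outcomes = [1, 1, 0, 1, 0, 0, 0, 0]
print(gains_table(scores, outcomes))
```

In this toy example, contacting the top 25% of records captures 67% of the positives, a lift of about 2.67 over random selection.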

5. What is Unsupervised Learning?

- Clustering

- Association Rules

- Why most organizations utilize unsupervised methods poorly

- Case Study 1: Finding a new opportunity

- Case Studies 2, 3, and 4: How do supervised and unsupervised work together

- Exercise Breakout Session: Pick the right approach for each case study

- Data Preparation for Unsupervised

- The importance of standardization

- Running an analysis directly on transactional data

- Unsupervised Algorithms:

- Hierarchical Clustering

- K-means

- Self-Organizing Maps

- K Nearest Neighbors

- Association Rules

- Interpreting Unsupervised

- Exercise Breakout Session: Which value of K is best?

- Choosing the right level of granularity

- Reporting unsupervised results
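Standardization matters because distance-based methods such as k-means compare raw numbers: a feature measured in tens of thousands (income) swamps one measured in single digits (visits). The Python sketch below, using hypothetical customers, shows that customer A’s nearest neighbour changes once both features are converted to z-scores.

```python
import math
from statistics import mean, pstdev

# Hypothetical customers: (annual income in euros, store visits per month).
customers = {"A": (30_000, 2), "B": (31_000, 20), "C": (34_000, 3), "D": (90_000, 20)}


def standardize(rows):
    """Rescale each feature to mean 0 and standard deviation 1 (z-scores)."""
    columns = list(zip(*rows.values()))
    stats = [(mean(col), pstdev(col)) for col in columns]
    return {key: tuple((v - m) / s for v, (m, s) in zip(row, stats))
            for key, row in rows.items()}


def nearest_to(key, rows):
    """Return the other customer closest to `key` by Euclidean distance."""
    return min((k for k in rows if k != key),
               key=lambda k: math.dist(rows[key], rows[k]))


raw_neighbour = nearest_to("A", customers)
scaled_neighbour = nearest_to("A", standardize(customers))
print(raw_neighbour, scaled_neighbour)
```

Unscaled, A looks closest to B (similar income, very different visit frequency); after standardization, A is closest to C, which is similar on both features.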

6. Wrap-up and Next Steps

- Supplementary Materials and Resources

- Conferences and Communities

- Get Started on a Project!

- Options for Implementation Oversight and Collaborative Development

Limited time?

Can you attend only one day? You can attend only the first or only the second conference day, or of course the full conference. The presentations by our speakers have been selected so that they can stand on their own, which means you can attend the second conference day even if you did not attend the first (or the other way around).

Speakers

Rick van der Lans

Keith McCormick

Kent Graziano

Lawrence Corr

Nigel Turner

Lex Pierik

Rutger Rienks

Gold and Platinum Partners

Exhibitors & Media partners

“Good quality content from experienced speakers. Loved it!”

Rotterdam University of Applied Sciences

“As always a string of relevant subjects and topics.”

Het Consultancyhuis

“Longer sessions created room for more depth and dialogue. That is what I appreciate about this summit.”

Erasmus MC

“Inspiring summit with excellent speakers, covering the topics well and from different angles. Organization and venue: very good!”

The Hague Municipality

“Inspiring and well-organized conference. Present-day topics with many practical guidelines, best practices and do's and don'ts regarding information architecture such as big data, data lakes, data virtualisation and a logical data warehouse.”

Closesure

“A fun event and you learn a lot!”

Centric

“As a BI Consultant I feel inspired to recommend this conference to everyone looking for practical tools to implement a long term BI Customer Service.”

iConsultancy

“Very good, as usual!”

biim